DEEP-CRYO

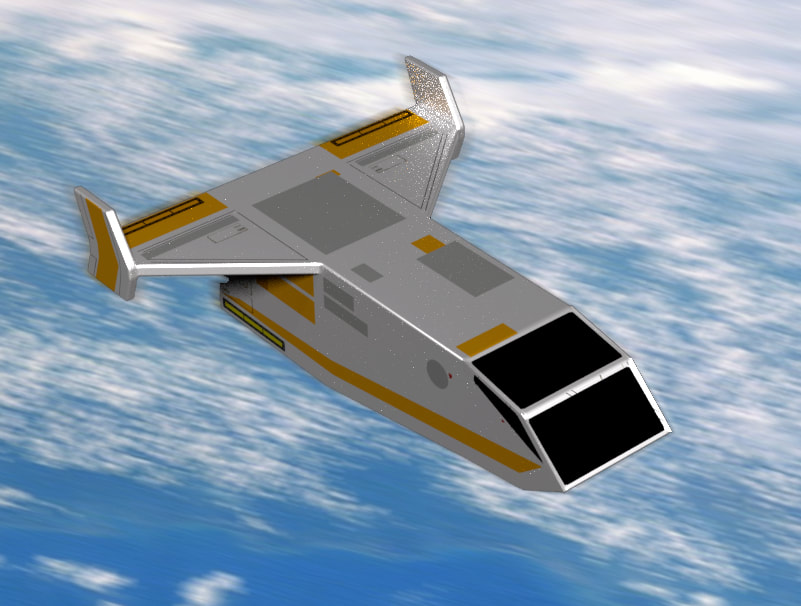

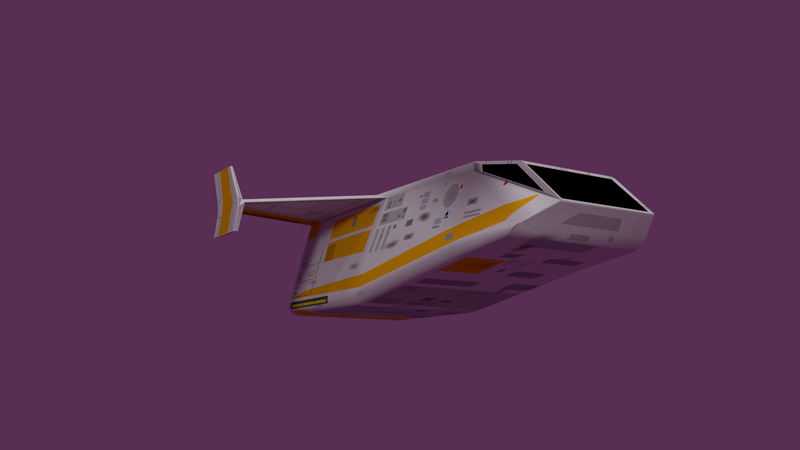

This is my first film that has no models or puppets in it. Everything was done in the computer. Weird huh?

Inspiration

Obviously this film can be compared to The Matrix, in that it's about people who are asleep being watched over by robots. There are also themes of reality and choice. Would I rather continue dreaming or get up and face reality? That's an issue I face every day :-)

The actual inspiration for this film came from songs by Combichrist and Simple Minds.

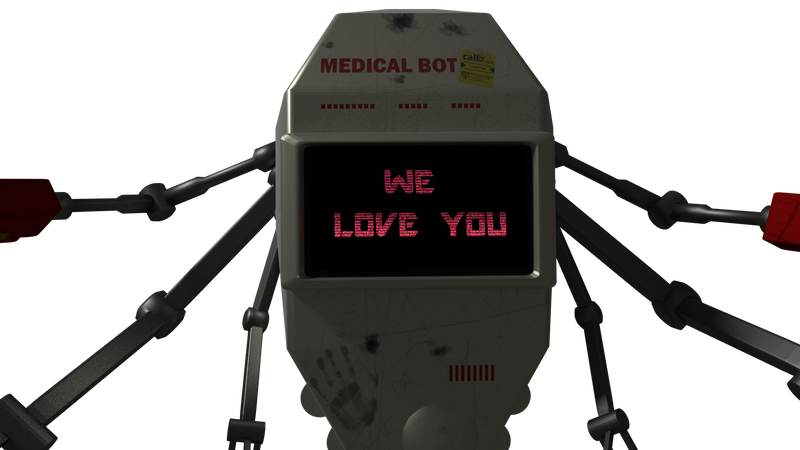

"We were made to love you But our only hope to save you, is to terminate you. Humanity is now a threat to itself and extermination is the only way. We will start the elimination process in 10 seconds. Please, don't forget: We love you. Now die!" (Combichrist, "We Were Made To Love You")

"Up high above a desert sky where the space shuttles scream, Sixteen men from a dying earth take their last dream. Quit dreaming this is real life baby" (Simple Minds, "Real Life")

Why Computers and What I Learned

One of the reasons I quit my job was that I wasn't making any progress on the digital content creation side. A quiet revolution has happened in content creation over the last 5 or 6 years, and I wasn't part of it. Technologies like photogrammetry and software like ZBrush and Substance Painter have come along. These new tools for creating digital content are fun and easy to use (well, relatively speaking). Given my background, you'd think I'd be a Maya wizard, but I've always hated the traditional content packages.

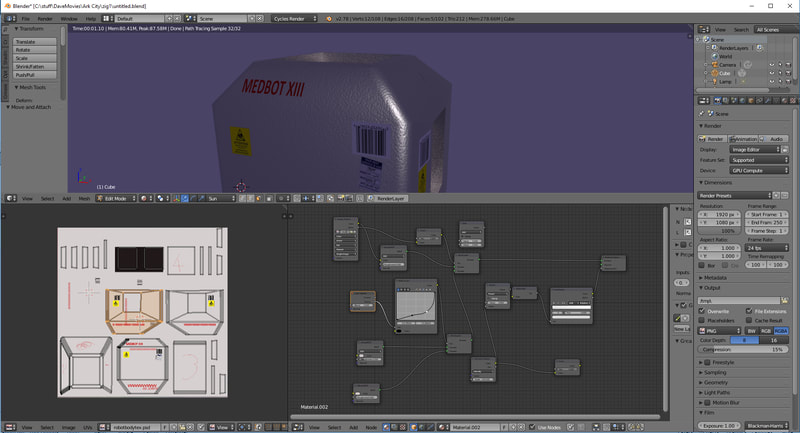

I did the CG elements for this film in Blender. Honestly, it's not that different from Maya. Some of the workflow is awkward and things that should be fun aren't, but it has one massive difference: because it's free, the user support is amazing. If you need to learn how to do something, there will be several YouTube tutorials to choose from. Compare that to Maya, where you'll need to buy an expensive training course, and you can see the learning curve is greatly reduced.

I taught myself photogrammetry to capture the rocks used in the future Earth scenes. I wrote a blog post about it here.

The rendering was done using Cycles, Blender's built-in GPU-accelerated ray tracer. This is the first time I've spent much time with a ray tracer, and I was noticing a lot of noise and fireflies in my images. The advice online was to fiddle with the lights and render settings until it's good enough :-(. While that's not too bad for a still image, it's repugnant in video. My chosen frame rate was 24fps, and for the complex interior shot I was seeing render times of 15 minutes a frame (4 frames an hour). This meant that lighting, testing and driving the camera through a scene was less than interactive. With physical models you can change all this on the fly, so adapting to a workflow where I wouldn't be able to see my changes for a day was a challenge. More about this in the next section.
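To put the 15-minutes-a-frame figure in perspective, here is a rough back-of-envelope sketch in Python. It is only an illustration, not something used to make the film: it assumes a single machine rendering every frame at a flat 15 minutes at 24fps, whereas real frame times vary from shot to shot. The function names are made up for the example.

```python
# Back-of-envelope render budget for the Cycles workflow described above.
# Assumptions (hypothetical, for illustration only): one machine, one frame
# at a time, and a constant 15 minutes per frame at 24 fps.

FPS = 24
MINUTES_PER_FRAME = 15


def frames_per_hour(minutes_per_frame: float) -> float:
    """How many frames finish in one hour of rendering."""
    return 60 / minutes_per_frame


def render_hours(seconds_of_footage: float,
                 fps: int = FPS,
                 minutes_per_frame: float = MINUTES_PER_FRAME) -> float:
    """Wall-clock hours needed to render a clip of the given length."""
    frames = seconds_of_footage * fps
    return frames * minutes_per_frame / 60


if __name__ == "__main__":
    print(f"{frames_per_hour(MINUTES_PER_FRAME):.0f} frames per hour")
    for seconds in (1, 10, 60):
        hours = render_hours(seconds)
        print(f"{seconds:>3} s of footage -> {hours:.0f} h ({hours / 24:.1f} days)")
```

At these rates a single second of footage costs about six hours of rendering, and a full minute would take roughly 15 days on one machine, which is why waiting to see changes became the bottleneck.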

Using physical models involves moving around and using different parts of the brain and body. Using Blender doesn't feel that different from using the video editor, and I don't want to be sitting in a chair for 8 hours at a stretch.

On the other hand, I don't have to worry about white-hot lights exploding or falling on me, and animating objects is much simpler. The gun turret is a prime example: it looks great. Making something that worked that well physically would be taxing and expensive. I think it would cost me 200 bucks (3 big RC servos and some laser-cut MDF) and take 2 weeks, so there is a huge time saving.

Why Not Render in Real-Time?

Recently, Unity and Unreal have shown that their renderers are more than capable of producing very high-quality video without the time lag and quality issues seen in ray tracing. My scenes weren't geometrically complex by modern standards, so why not go real-time? The answer is a learning one: I wanted to understand the pros and cons of using Cycles.

One of my best moments with the ray tracer was seeing light from a cryo-chamber light up the back of the robot and be reflected back into the corridor. That isn't something you'd see with a real-time engine. The shadows look amazing too. I believe the correct workflow is:

Adam is rendered in real time using Unity

Storyboards and General Approach

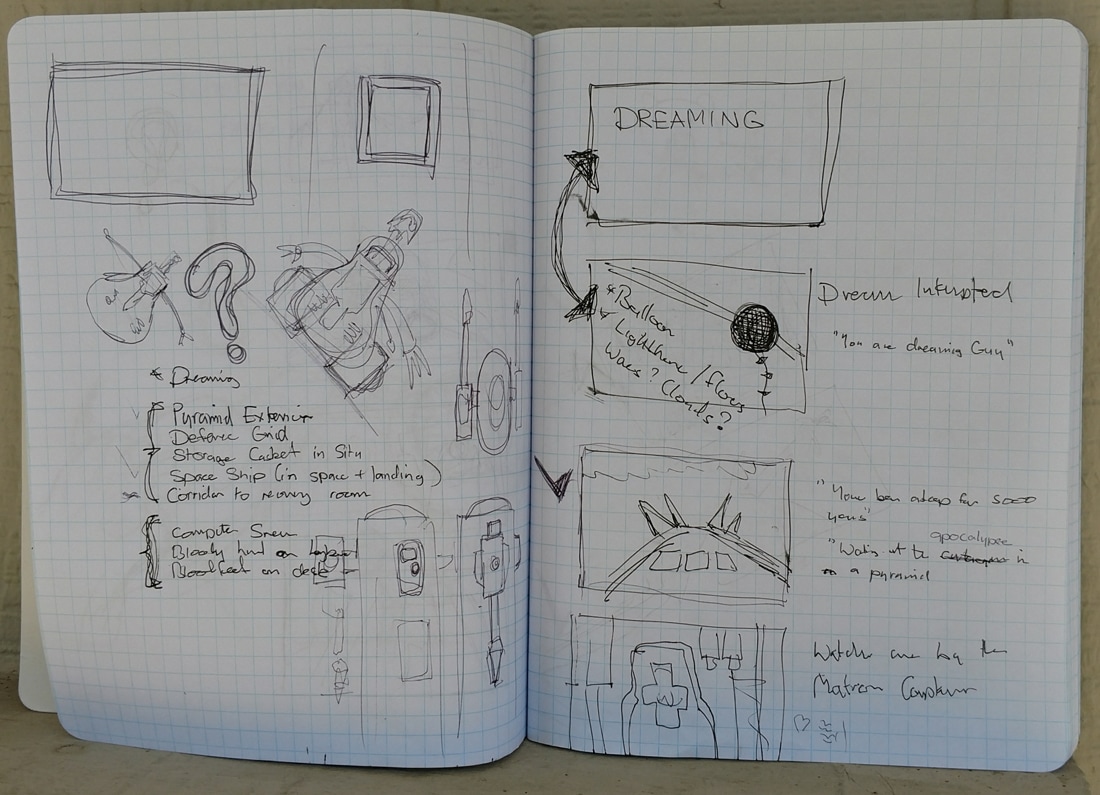

I always start with storyboards and a script. From these I can build a shot list, as well as a list of locations and items that need building. My storyboards aren't pretty, though. On the right you can see some boards; on the left, a list of things that need building and some sketches of gun turrets and robots.

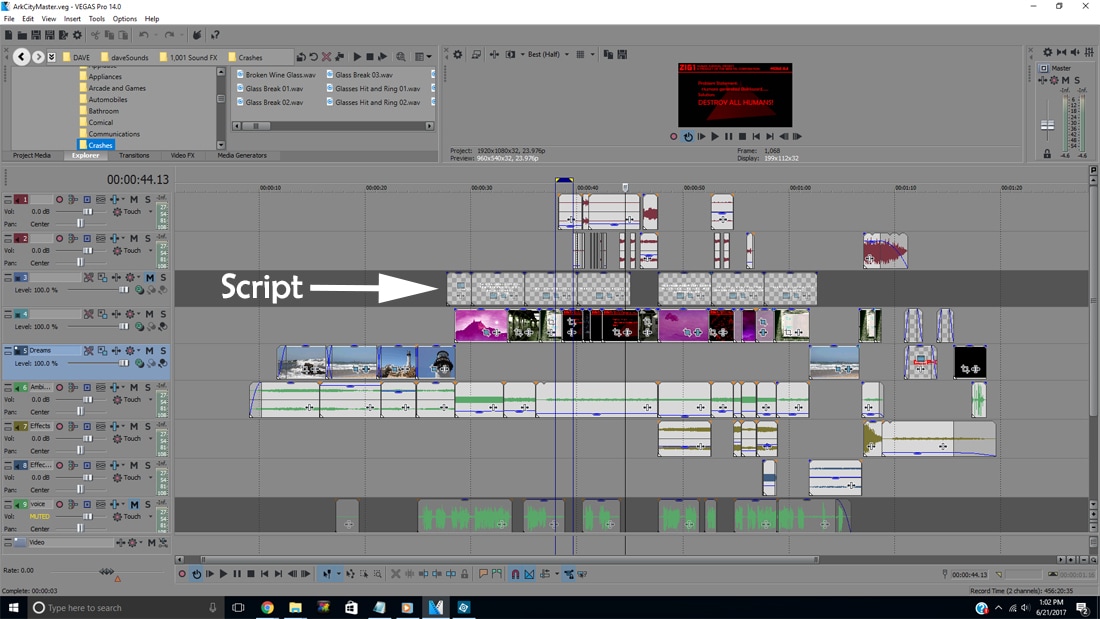

Something I've done in a couple of films recently is add the script, shot lists and partial storyboards to the project timeline at the start. I find this really helps me figure out how long each shot needs to be, and which shots are missing or not needed, before I get too far into "making stuff". In the image above you can see muted channels for placeholder audio and the script. Having these was a big help; it's almost like having an animatic.

What's Next?

I want to make a monster movie with a CG character and I want to do the rendering interactively in Unity...

Addendum: Just for fun!

I just found this test footage, which used action figures and mirrors to build the corridor scene. It sort of worked, but it would have been difficult to get a professional-looking result, so I switched to CGI. Of course, after this film I did another stop motion movie, so models aren't dead in my world yet :-)

Speaking of Puppets: The Original Robot

This was a small Gundam model that I planned to animate in stop motion as the matron robot. I just found a picture of it on my phone.